This article briefly describes our successful experience of implementation “tracking of moving objects” algorithm as a part of intelligent video analysis solution. It contains high level instructions how to “convert” a video stream into a list of tracks of moving objects.

Introduction to motion recognition

Motion recognition is very important in automated surveillance systems. In this article we propose a novel approach to implement a complete framework that allows real-time detection and motion-based tracking of moving objects in a video stream acquired by a stationary camera. Motion detection is the process of recognition of moving objects (particularly people) on the video source (stream of frames). It has many application areas:

- Surveillance systems;

- Security cameras;

- Automated lighting control;

- Laser gestures recognition;

- Detecting comets or asteroids;

- Detecting any type of motion and making camera shots.

Motion detection algorithm

As an input, we receive a stream of frames (images) captured from a video source (for example, from a video file or a web camera). The algorithm should gather information about moving objects (size, trajectory, etc.).

In our approach, we decompose an original problem into several smaller sub problems:

- Motion detection;

- Blob analysis;

- Matching moving objects between consecutive frames.

Let’s consider each part separately from each other.

Motion Detection Approach

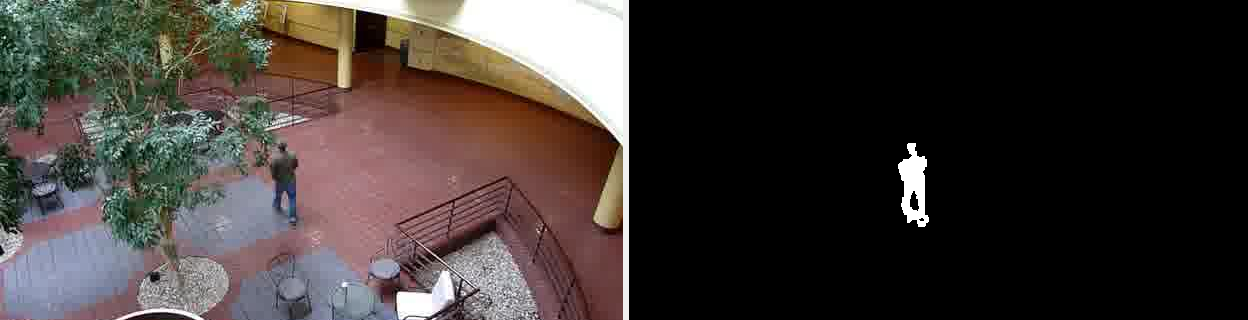

There are several approaches to motion detection. Most of them are based on comparing a frame of the video with the next or previous frame (or with the background). In our approach, we use an original background subtraction algorithm. Before subtracting a current frame from the background, we perform a gray scaling of it (Figure 1). Different video sources might have different format of frames. Thus, we have decided to work with gray-scaled frames.

We separate motion detection part into two steps.

The first step identifies moving objects relying on the difference between the current frame and the background. The background frame is updated regularly once per K frames (where K – integer number). To update the background frame, the algorithm makes two steps:

- Calculates the difference between the current frame and the current background frame;

- If the difference for particular pixel is greater than 0 (zero), then the correspondent pixel in the background frame becomes more solid (whiter). Otherwise – weaker (blacker).

Actually, motion detection part works per pixel too:

- Calculates the difference between each pixel;

- Marks pixels with difference greater than the specified threshold with a foreground color – white. All other pixels are marked in black.

Therefore, an output of the first step will be a binary frame with only two colors: white and black (Figure 2). Let’s call it – foreground mask.

Figure 2. Foreground mask

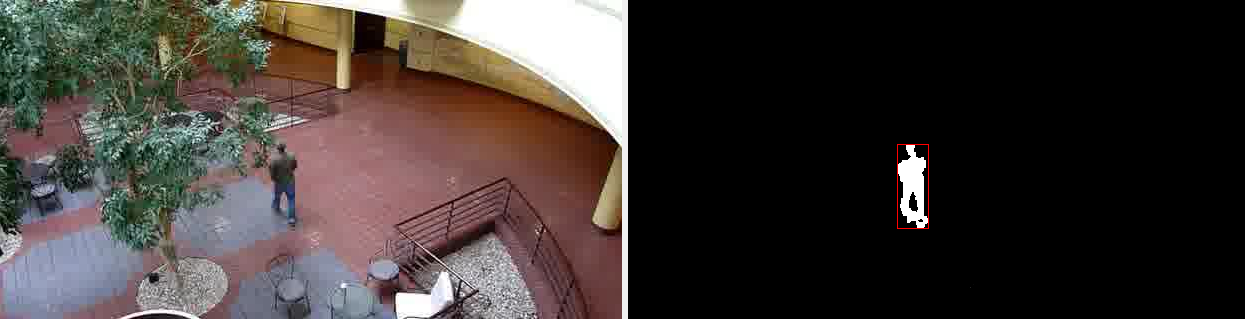

The second step applies morphological operations to the resulting foreground mask to eliminate noise. For this purpose we use an erosion filter. The erosion filter removes pixels that are not surrounded by enough amount of adjacent pixels. The foreground mask is processed pixel by pixel and, if an enough amount of pixels in the current window (we uses 3×3 window) are not white, then the entire window will be black. Thus, the noise (stand-alone pixels) will be eliminated. Also, to keep object’s edges we apply a dilatation filter to the same 3×3 window. Figure 3 shows the result of the motion detection part – filtered foreground mask.

Figure 3. Filtered foreground mask

Blob Analysis

In this part of the algorithm, we find blobs in the binary image (foreground mask). For image processing, a blob is a region of connected pixels. To solve this problem we use a simple BFS (Breadth-First Search) algorithm. As an output of this part of process, we get a list of objects with following properties:

- ID – an identifier;

- Bounding box – the rectangle that contains all pixels which belong to a blob;

- Centroid – point, the center of a blob.

Let’s call this object – track. Thus, each track will correspond exactly to one blob and vice versa.

Before adding a blob to the result list, we check, if its size satisfies our expectation.

Figure 4 shows the visualization of the foreground mask after the blob analysis.

Here is the result of running our Blob Analysis algorithm on a video stream – motion detection and tracking in real-time:

We make Bounding boxes red for visualization, but any action can be applied to tracks and recognized objects.

Matching Moving Objects between Consecutive Frames

This part is the most important and hardest. It’s difficult to match moving objects between adjacent frames because:

- the path (trajectory) of blobs might be intersected;

- the blobs might be very close to each other (like two walking people holding their hands);

- of the interference (trees, buildings, etc.).

The association of detection (blobs) to the same object (track) is based on motion. We use Kalman filter to estimate the motion of each track. The filter is used to predict the location of a track in each frame.

During the processing of a frame, some blobs may be assigned to tracks while other blobs and tracks may stay unassigned. So let’s consider different cases for blobs and tracks:

- A blob is assigned to a track. Then we have to update track’s information (size, centroid, etc.);

- A track is unassigned. Mark this track as invisible.

- A blob is unassigned. Begin new track with this blob’s data.

Each track has an amount of invisible (marked as invisible) consecutive frames. If its amount exceeds a specified number, then the algorithm deletes the track.

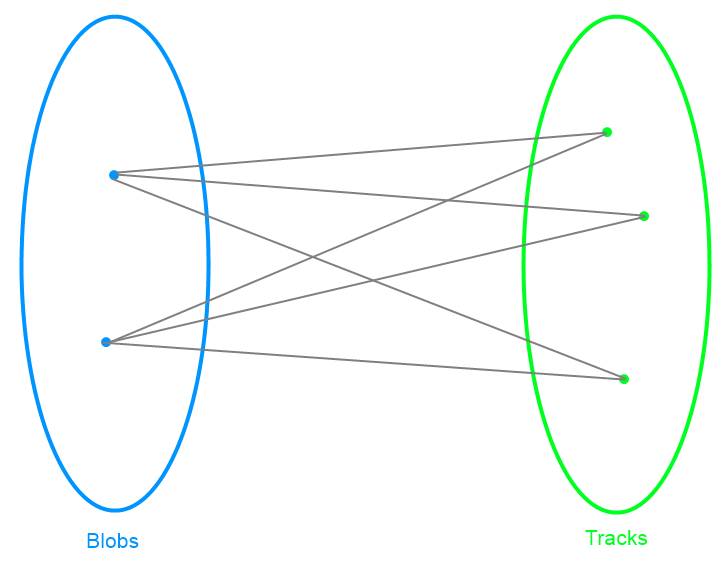

In this part of the process, the well-known problem appears – assignment problem (blobs to tracks). First, we build a bipartite graph: we have a source S, a sink T, in the first part we place N vertices (corresponding to blobs), in the second – M vertices (corresponding to tracks). Each blob has an edge to each track and vice versa. The weight of the edge is a distance between centroids of a blob and a track.

Schematically, it looks like on the Figure 5.

Figure 5. Bipartite graph – blobs to tracks

Then we use the min-cost-flow algorithm to solve the current problem. The assignment problem can be solved by another well-known algorithm –Hungarian algorithm (aka Kuhn–Munkres algorithm).

For each track, we keep count of the number of consecutive frames, where it remained unassigned. If the count exceeds a specified threshold we assume that the object left the field of view and delete the track.

Figure 6. Matching moving object between consecutive frames

Collected information allows us to analyze trajectories of moving objects (Figure 7).

Figure 7. Trajectory of moving object

Other cases for algorithm application

These algorithms might be useful in many other applications. For example, we can use a part of our algorithms:

- to recognize gestures on the video. Let us suppose we have a camera which monitors an area. We may create an application that, when somebody gets into that area and makes some hands gestures in front of the camera, should detect the type of the gesture and show some information on the screen. We can implement application that will control some sort of device via gestures;

- to find out the direction of the moving object;

- to detect movements in specific areas.

Here is an example of running Matching Moving Objects between Consecutive Frames on the video stream.

Motion recognition article summary

In this article we made an overview of our algorithm used to detect motion and keep tracking of moving objects on the video stream.

Taras Zubyk, Mike Makarov

Hi,

It is very informative .

Can we have the source codes for linux environment to play with it ?

thx