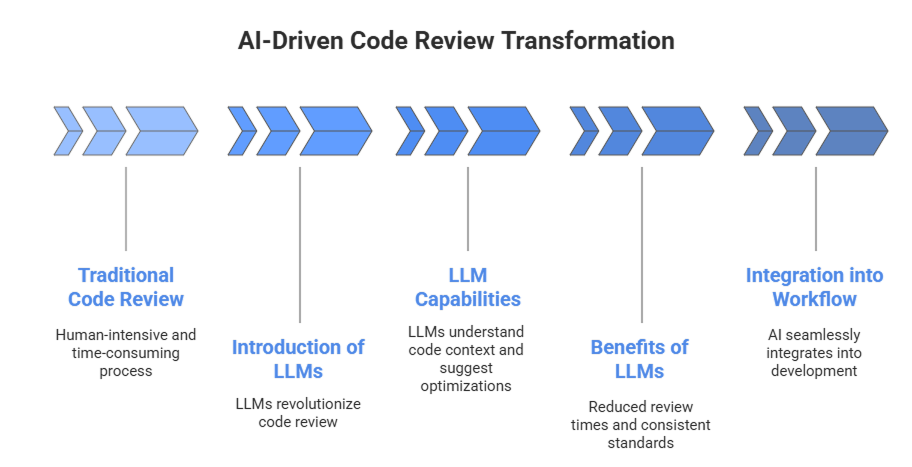

Code review – the bedrock of software quality – is undergoing a monumental shift. What was once a human-intensive, often time-consuming process is now being revolutionized by Large Language Models (LLMs). This isn’t just about catching typos; it’s about a new era where AI deeply understands code context, suggests intelligent optimizations, and dramatically accelerates your development cycle.

Traditional code linters offer rule-based syntax checks, but LLMs go further. Trained on vast datasets of code and text, they grasp programming concepts, can anticipate potential side effects, and propose improvements aligned with your codebase. The benefits are compelling: review cycle times can shrink from days to hours, senior engineers are freed from mundane tasks, and coding standards are enforced consistently. And in today’s diverse tech stacks, a single LLM can apply the same review standards across Python, Java, JavaScript, C#, and more, breaking down language-specific silos within teams.

This guide dives into the evolving landscape of AI code review tools, comparing leading solutions, their real-world performance, and crucial cost implications. We’ll also explore best practices and key challenges to help you integrate AI seamlessly into your development workflow.

Comparing the AI Code Review Landscape

The effectiveness of an AI code review tool often boils down to its understanding of context. Does it see just the isolated change (a “diff”), or does it grasp the entire project’s architecture? This distinction creates a spectrum of tools:

- Diff-Aware (Level 1): Focus on the immediate code changes.

- Repo-Aware (Level 2): Utilize techniques like Retrieval-Augmented Generation (RAG) to pull in relevant information from the entire repository, offering more contextual suggestions.
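To make the Level 1 / Level 2 distinction concrete, here is a minimal sketch of the extra retrieval step a repo-aware reviewer performs before prompting the model. It assumes a naive token-overlap score in place of the embedding search a real RAG system would use; `retrieve_context` and the sample files are hypothetical.

```python
import re
from collections import Counter

# Identifier-like tokens; a crude stand-in for a real embedding model.
TOKEN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def tokens(text: str) -> Counter:
    """Count lowercased identifier tokens in a piece of text."""
    return Counter(t.lower() for t in TOKEN.findall(text))


def retrieve_context(diff: str, repo: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k repository files sharing the most tokens with the diff.

    A diff-aware (Level 1) reviewer sees only `diff`; a repo-aware (Level 2)
    reviewer would also receive the files returned here in its prompt.
    """
    diff_tokens = tokens(diff)
    scores = {}
    for path, source in repo.items():
        overlap = diff_tokens & tokens(source)  # multiset intersection (min counts)
        scores[path] = sum(overlap.values())
    ranked = sorted(scores, key=lambda p: (-scores[p], p))
    return [p for p in ranked[:k] if scores[p] > 0]


if __name__ == "__main__":
    repo = {
        "auth/middleware.py": "def require_user(request): check_token(request.headers)",
        "api/orders.py": "def list_orders(request): return db.query(Order)",
        "docs/readme.md": "Project overview and setup instructions",
    }
    diff = "+def cancel_order(request):\n+    order = db.query(Order).get(request.id)"
    print(retrieve_context(diff, repo))
```

With the sample inputs this prints `['api/orders.py', 'auth/middleware.py']`. Lexical overlap is deliberately crude here; production tools index the repository with embeddings so that semantically related code is found even when identifiers differ.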

Let’s explore the leading solutions from major tech companies and the open-source community, focusing on user feedback, real-world performance, and cost.

Platform-Native Solutions from LLM Creators

These tools are built directly into major development platforms, offering seamless integration. They are often the first choice for teams invested in these ecosystems.

GitHub Copilot

As the incumbent AI coding assistant from Microsoft’s GitHub, Copilot has expanded its capabilities into the pull request (PR) process. It can be assigned as a reviewer, where it will generate a summary and post comments. However, it is designed as a non-blocking assistant and will never “approve” or “request changes.”

- User Feedback & Performance: The consensus among developers is that Copilot’s PR review feature is best used as a “first-pass” tool. It excels at catching “mechanical” errors, minor logic flaws, and stylistic nits. However, many users report that its suggestions can be superficial and that it often misses the “bigger picture” compared to more specialized tools. One user noted, “To this date the built in copilot on review has never returned a usable suggestion.” This highlights its primary strength as an enhanced linter rather than a deep architectural reviewer.

- Cost: The code review feature is included in paid plans like Copilot Pro ($10/user/month) and Business ($19/user/month), which come with a monthly quota for full PR reviews.

Google’s Gemini Code Assist

Google’s offering integrates into GitHub as a bot (gemini-code-assist[bot]) that can be automatically added as a reviewer. It generates PR summaries and provides in-depth reviews with comments categorized by severity (Critical, High, Medium, Low). A key feature is its interactivity: developers can invoke it manually at any time with a /gemini command in a PR comment.

- User Feedback & Performance: As a direct competitor to Copilot, Gemini Code Assist leverages Google’s powerful models. Its ability to reference user-provided style guides offers a degree of customizability that can lead to more relevant feedback. The structured, severity-ranked comments help teams prioritize which issues to address first.

- Cost: Gemini Code Assist is part of the Google Cloud subscription, making it an attractive option for teams already utilizing the GCP ecosystem.
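The value of severity ranking is easiest to see in how a team might triage the output. The sketch below assumes each review comment has already been parsed into a small record carrying one of the four severity labels listed above; the `ReviewComment` type, the `triage` helper, and the sample comments are hypothetical and are not Gemini Code Assist’s own data format.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Higher value = more urgent; mirrors the four labels described above.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass
class ReviewComment:
    path: str
    line: int
    severity: Severity
    message: str


def triage(comments: list[ReviewComment],
           min_severity: Severity = Severity.MEDIUM) -> list[ReviewComment]:
    """Drop comments below `min_severity`; order the rest most urgent first, then by file and line."""
    kept = [c for c in comments if c.severity >= min_severity]
    return sorted(kept, key=lambda c: (-c.severity, c.path, c.line))


if __name__ == "__main__":
    comments = [
        ReviewComment("api/orders.py", 42, Severity.LOW, "Consider a more descriptive name."),
        ReviewComment("api/orders.py", 10, Severity.CRITICAL, "User input is passed to a raw SQL string."),
        ReviewComment("auth/session.py", 7, Severity.HIGH, "Token expiry is never checked."),
        ReviewComment("api/orders.py", 88, Severity.MEDIUM, "Missing error handling for a failed query."),
    ]
    for c in triage(comments):
        print(f"[{c.severity.name}] {c.path}:{c.line} {c.message}")
```

Raising `min_severity` to `Severity.HIGH` is one way a team could start by acting only on the most serious findings and widen the filter as trust grows.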

Anthropic’s Claude Code (as an Agentic Reviewer)

While not a pre-packaged “bot” in the same way as other tools, Claude Code’s power lies in its agentic capabilities. It’s a low-level, unopinionated tool that can be scripted to perform complex, multi-step tasks across an entire codebase, including sophisticated code reviews. This approach moves beyond simple PR comments to create a true AI collaborator.

- User Feedback & Performance: Users leverage Claude Code by creating custom agentic workflows. A powerful pattern for review involves using one Claude instance to write code and a second, separate instance to review that code, preventing context contamination and bias. This allows for a more objective and thorough analysis than single-pass tools. Some users report large productivity gains, with a few describing rebuilding entire applications in hours instead of weeks. In direct comparisons, Claude has been noted for its strength in handling integrated frontend and backend changes, often requiring fewer iterations to get to the correct solution.

- Cost: Pricing is based on direct API usage. This provides flexibility but can be costly for frequent, complex reviews. One user reported a cost of $4.69 for three simple changes, highlighting that per-change pricing can add up quickly.
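The writer/reviewer pattern boils down to keeping two independent conversation histories, so the reviewer never sees the writer’s reasoning, only the resulting diff. A minimal sketch, assuming a hypothetical `complete(messages)` function that wraps whichever LLM API you use (stubbed here so the example runs offline); this is an illustration of the pattern, not Claude Code’s own interface.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical signature for any chat-completion wrapper: it takes a message
# history and returns the assistant's reply as text.
Complete = Callable[[list[dict]], str]


@dataclass
class Session:
    """One isolated LLM conversation with its own history."""
    system: str
    complete: Complete
    history: list[dict] = field(default_factory=list)

    def ask(self, prompt: str) -> str:
        self.history.append({"role": "user", "content": prompt})
        reply = self.complete([{"role": "system", "content": self.system}] + self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


def write_then_review(task: str, complete: Complete) -> tuple[str, str]:
    """Generate a change in one session and review it in a fresh, separate one."""
    writer = Session("You write code changes as unified diffs.", complete)
    reviewer = Session("You review diffs for bugs and risky changes.", complete)
    diff = writer.ask(task)
    # The reviewer receives only the diff: no task framing, no writer history.
    review = reviewer.ask(f"Review this diff:\n{diff}")
    return diff, review


if __name__ == "__main__":
    def fake_complete(messages: list[dict]) -> str:
        # Offline stub: report how many messages this session sent.
        return f"reply after {len(messages)} messages"

    diff, review = write_then_review("Add input validation to create_user().", fake_complete)
    print(diff)
    print(review)
```

Each `ask` call on the reviewer carries only that session’s history, which is what keeps the second instance’s judgment independent of the first. Because every call is billed, running two sessions per change also roughly doubles the per-change API cost discussed above.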

Specialized and Open-Source Tools

These tools often focus on solving specific problems or providing maximum flexibility.

Qodo

Qodo is a prime example of a “Repo-Aware” (Level 2) tool. It uses Retrieval-Augmented Generation (RAG) to index the entire repository, allowing it to provide context-aware suggestions that align with existing project patterns.

- User Feedback & Performance: Users praise Qodo for its ability to understand the codebase’s context, leading to smarter suggestions. Its standout features include validating that PRs meet the requirements of an associated ticket and generating unit tests for the new changes. A study on an open-source version of Qodo found that while it enhanced bug detection and promoted best practices, it also increased the average PR closure time due to the volume of feedback.

- Cost: Qodo offers a generous free Developer tier based on a monthly credit system (250 credits/month). Paid plans for Teams ($30/user/month) and Enterprise ($45/user/month) offer more credits and features like on-premise hosting. Most operations cost 1 credit, but premium models like Claude Opus cost 5 credits per request.
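Because premium models cost more credits, the effective number of reviews per month depends on the model mix. A quick estimate using the figures above (250 free credits, 1 credit for most operations, 5 for premium models); the premium shares tried here are illustrative assumptions.

```python
def requests_per_month(credits: int, premium_share: float,
                       standard_cost: int = 1, premium_cost: int = 5) -> int:
    """How many requests a monthly credit budget covers for a given model mix."""
    if not 0.0 <= premium_share <= 1.0:
        raise ValueError("premium_share must be between 0 and 1")
    # Weighted average credit cost of a single request.
    avg_cost = (1 - premium_share) * standard_cost + premium_share * premium_cost
    return int(credits // avg_cost)


if __name__ == "__main__":
    for share in (0.0, 0.2, 1.0):
        print(f"{share:.0%} premium: {requests_per_month(250, share)} requests/month on the free tier")
```

With one request in five going to a premium model, the average request costs 1.8 credits, so the free tier covers about 138 requests a month instead of 250.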

Codedog

This open-source, Python-based CLI tool is designed for flexibility. Its main strength is that it is model-agnostic, with built-in support for multiple LLM backends, including OpenAI, Azure OpenAI, and DeepSeek.

- User Feedback & Performance: As a CLI tool, Codedog is praised for its easy integration into any CI/CD pipeline. It can generate summaries for PRs and individual files and provides a scoring system for readability and maintainability. Its flexibility makes it a strong choice for teams that want to experiment with different models or build a custom review process.

- Cost: Codedog is open-source and free to use, with costs being determined by the API usage of the chosen LLM backend.
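Model-agnostic tools generally hide each provider behind a common interface so the review logic does not change when the backend does. The sketch below illustrates that idea with a `typing.Protocol`; the `ReviewBackend` interface, the `EchoBackend` stub, and the `BACKENDS` registry are hypothetical and do not reflect Codedog’s internal API.

```python
from typing import Protocol


class ReviewBackend(Protocol):
    """Anything that can turn a prompt into review text."""
    name: str

    def review(self, prompt: str) -> str: ...


class EchoBackend:
    """Offline stand-in; a real backend would call OpenAI, Azure OpenAI, DeepSeek, etc."""

    def __init__(self, name: str) -> None:
        self.name = name

    def review(self, prompt: str) -> str:
        return f"[{self.name}] reviewed {len(prompt.splitlines())} lines"


# Hypothetical registry: swapping models becomes a configuration change.
BACKENDS: dict[str, ReviewBackend] = {
    "openai": EchoBackend("openai"),
    "deepseek": EchoBackend("deepseek"),
}


def run_review(diff: str, backend_name: str) -> str:
    """Build the same prompt regardless of which backend is selected."""
    try:
        backend = BACKENDS[backend_name]
    except KeyError:
        raise ValueError(f"unknown backend: {backend_name!r}") from None
    return backend.review(f"Review the following diff:\n{diff}")


if __name__ == "__main__":
    diff = "+x = 1\n+y = 2"
    for name in BACKENDS:
        print(run_review(diff, name))
```

Keeping prompt construction in one place and the provider call behind the interface is what makes it cheap to A/B different models on the same PRs.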

Insights from Enterprise-Scale Implementations

Large tech companies have built their own internal tools, offering a blueprint for what makes an AI review system effective at scale.

ByteDance’s BitsAI-CR: Used by over 12,000 developers, BitsAI-CR achieves a high precision rate of 75% by using a two-stage pipeline. A RuleChecker LLM first identifies potential issues, and a second ReviewFilter LLM verifies the findings to reduce false positives. Crucially, it uses a data flywheel for continuous improvement, tracking not just user “likes” but also an “Outdated Rate” metric that measures whether developers actually act on the AI’s suggestions. This demonstrates that mature systems must prioritize precision and develop metrics that measure real-world impact.
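The two-stage idea and the Outdated Rate metric can be sketched in a few lines. Here both LLM stages are replaced by plain callables so the example runs offline, and the Outdated Rate is approximated as the share of posted findings whose line was changed by a later commit; ByteDance’s exact definition may differ, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Finding:
    path: str
    line: int
    message: str


def two_stage_review(
    diff: str,
    rule_checker: Callable[[str], list[Finding]],
    review_filter: Callable[[Finding, str], bool],
) -> list[Finding]:
    """Stage 1 proposes candidate issues; stage 2 keeps only the ones it confirms."""
    candidates = rule_checker(diff)
    return [f for f in candidates if review_filter(f, diff)]


def outdated_rate(findings: list[Finding], later_changed: set[tuple[str, int]]) -> float:
    """Share of posted findings whose (path, line) was modified afterwards.

    A high value suggests developers acted on the comments, which likes alone cannot show.
    """
    if not findings:
        return 0.0
    acted_on = sum((f.path, f.line) in later_changed for f in findings)
    return acted_on / len(findings)


if __name__ == "__main__":
    def checker(diff: str) -> list[Finding]:
        # Stand-in for the first LLM stage.
        return [Finding("a.py", 3, "possible None dereference"),
                Finding("a.py", 9, "unused variable"),
                Finding("b.py", 1, "style: line too long")]

    def keep_non_style(finding: Finding, diff: str) -> bool:
        # Toy verifier for the second stage: discard pure style findings as likely noise.
        return not finding.message.startswith("style:")

    posted = two_stage_review("+x = compute()", checker, keep_non_style)
    print(len(posted), outdated_rate(posted, {("a.py", 3)}))
```

With the toy stages above, two of the three candidates survive the filter and one of them is later edited, giving an Outdated Rate of 0.5.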

Google’s Critique: Google’s internal review system and engineering practices emphasize that the goal of code review is the continuous improvement of overall code health, not the pursuit of perfection. Their system has achieved 97% engineer satisfaction by augmenting, not replacing, human reviewers. This philosophy – using AI to handle routine checks while freeing up humans for complex architectural decisions – is a guiding principle for successful implementation.

Table 1: Cost and Feature Comparison

| Tool/Platform | Key Feature | Typical Build Server Integration | Pricing Model |
|---|---|---|---|
| GitHub Copilot | Seamless GitHub integration; good for syntax and style. [6] | Parallel to build server (via SCM webhook). | Subscription: Pro plan is $10/user/month. [9] |
| Gemini Code Assist | Deep integration with GCP; severity-ranked comments. [10] | Parallel to build server (via SCM webhook). | Part of Google Cloud subscription. |
| Claude Code (Agentic) | Full codebase awareness; scriptable agentic workflows. [11] | Full custom script orchestration. | Usage-based, per API call (e.g., one user reported $4.69 for three changes). [14] |
| Qodo | Repo-aware (RAG) context; test generation. [4] | Parallel to build server (via SCM webhook). | Credit-based: free Developer tier (250 credits/mo); Teams plan is $30/user/mo (2,500 credits). [18] |
| Codedog | Open-source and model-agnostic (OpenAI, DeepSeek, etc.). [19] | In-pipeline CLI execution. | Open source (free); cost is based on LLM API usage. |
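The pricing models in the table above scale differently: subscriptions scale with headcount, while API usage scales with review volume. The rough comparison below uses the table’s figures ($10 and $30 per user per month, and the single reported data point of $4.69 for three changes, about $1.56 per change). Team size and monthly change volume are illustrative assumptions, the review quotas bundled into subscription plans are ignored, and real API costs vary widely with change size and model choice.

```python
def per_seat(users: int, price_per_user: float) -> float:
    """Monthly cost of a per-user subscription."""
    return users * price_per_user


def per_usage(changes: int, cost_per_change: float) -> float:
    """Monthly cost of pay-per-call API usage."""
    return changes * cost_per_change


def break_even_changes(price_per_user: float, cost_per_change: float) -> float:
    """Changes per user per month at which usage pricing matches a seat price."""
    return price_per_user / cost_per_change


if __name__ == "__main__":
    users = 10                     # assumption: team size
    changes_per_user = 30          # assumption: reviewed changes per developer per month
    claude_per_change = 4.69 / 3   # from the single reported data point

    costs = {
        "Copilot Pro ($10/user)": per_seat(users, 10),
        "Qodo Teams ($30/user)": per_seat(users, 30),
        "Claude API (~$1.56/change)": per_usage(users * changes_per_user, claude_per_change),
    }
    for plan, monthly in costs.items():
        print(f"{plan:<28} ${monthly:,.2f}/month")
    print(f"Break-even vs a $10 seat: "
          f"{break_even_changes(10, claude_per_change):.1f} changes/user/month")
```

Under these assumptions, pay-per-use overtakes a $10 seat at roughly 6.4 reviewed changes per developer per month, which is why usage-based agentic review tends to suit targeted, high-value reviews rather than every PR.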

Architectural Patterns for Build Server Integration

Integrating an LLM reviewer with a build server is less about finding a specific plugin and more about choosing the right architectural pattern. Modern CI/CD platforms like Jenkins, GitHub Actions, GitLab CI/CD, CircleCI, and Azure DevOps excel as flexible orchestrators, capable of integrating any tool through their core functionalities like executing scripts and managing credentials. Let’s take a closer look at the two primary patterns for this integration.

The SaaS Webhook Approach (CodeRabbit, Qodo, GitHub Copilot, Gemini)

This is the simplest and most common pattern. The AI review tool is a managed SaaS product that integrates directly with your source code platform (e.g., GitHub) via an app or webhook. [27] When a PR is created, the SCM platform triggers both the build server pipeline and the AI reviewer in parallel. The two systems run independently, and their results appear as separate checks on the PR. This requires minimal configuration within the build server itself, as its role remains focused on traditional CI tasks like building and testing.
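On the SCM side, the webhook receiver behind such a reviewer mainly has to authenticate each delivery and decide which events should trigger a review. A minimal sketch for GitHub’s `pull_request` event: it checks the `X-Hub-Signature-256` HMAC-SHA256 header and reads the `action`, `number`, `pull_request.draft`, and `pull_request.head.sha` payload fields. Which actions to react to, and skipping draft PRs, are assumptions a team would tune; the function names are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Actions after which the PR's code may have changed (GitHub `pull_request` event).
REVIEW_ACTIONS = {"opened", "synchronize", "reopened", "ready_for_review"}


def verify_signature(secret: bytes, body: bytes, header: str) -> bool:
    """Compare the X-Hub-Signature-256 header with our own HMAC of the raw body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, header)


def review_target(event_name: str, payload: dict) -> Optional[tuple[int, str]]:
    """Return (PR number, head commit SHA) if this delivery should trigger a review."""
    if event_name != "pull_request":
        return None
    if payload.get("action") not in REVIEW_ACTIONS:
        return None
    pr = payload["pull_request"]
    if pr.get("draft"):
        return None  # assumption: don't spend review budget on drafts
    return payload["number"], pr["head"]["sha"]


if __name__ == "__main__":
    # Trimmed example payload; real deliveries carry many more fields.
    body = b'{"action": "synchronize", "number": 17, "pull_request": {"draft": false, "head": {"sha": "abc123"}}}'
    secret = b"webhook-secret"
    signature = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    if verify_signature(secret, body, signature):
        # The event name arrives in the X-GitHub-Event HTTP header.
        print(review_target("pull_request", json.loads(body)))
```

Because the build server receives its own delivery of the same event, neither system waits on the other, which is what lets their results show up as separate checks on the PR.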

The In-Pipeline CLI Approach (Codedog, reviewdog)

This pattern offers more direct control by running a command-line tool as a stage within the build server pipeline. This is ideal for open-source tools or custom scripts. The pipeline can be configured to run the AI review at a specific point, for example, only after all unit tests have passed. This gives the pipeline author explicit control over the workflow but requires that the necessary CLI tools and dependencies are installed on the build agents.
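In the in-pipeline pattern, the review stage is simply a script the build agent runs. The sketch below gates the review on the test result, collects the branch diff with `git diff origin/main...HEAD`, and always exits 0 so the stage never blocks a merge. `run_reviewer` is a hypothetical placeholder for whichever CLI or API call a team wires in (for example Codedog), the `origin/main` base branch is an assumption, and posting the result as a PR comment is left out.

```python
import subprocess
import sys
from typing import Callable


def collect_diff(base: str = "origin/main") -> str:
    """Diff of the current branch against its merge base with `base`."""
    result = subprocess.run(
        ["git", "diff", f"{base}...HEAD"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def review_stage(tests_passed: bool, get_diff: Callable[[], str],
                 run_reviewer: Callable[[str], str]) -> int:
    """Run the AI review only after tests pass; never fail the build."""
    if not tests_passed:
        print("Skipping AI review: tests failed.")
        return 0
    try:
        diff = get_diff()
        if diff.strip():
            print(run_reviewer(diff))
        else:
            print("Skipping AI review: empty diff.")
    except Exception as exc:  # a git error or reviewer outage must not break the pipeline
        print(f"AI review unavailable: {exc}", file=sys.stderr)
    return 0


if __name__ == "__main__":
    # Hypothetical wiring: the pipeline passes the test outcome as the first argument.
    passed = len(sys.argv) > 1 and sys.argv[1] == "passed"
    sys.exit(review_stage(passed, collect_diff,
                          lambda d: f"{len(d.splitlines())} diff lines sent for review"))
```

Returning 0 on every path is the design choice that keeps this stage advisory; flipping it to a non-zero exit on serious findings is a later, deliberate step once the team trusts the output.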

Strategic Best Practices and Core Challenges

Successfully implementing an AI reviewer requires a strategic approach that acknowledges the technology’s limitations.

Best Practices for Success

- Start as an Assistant, Not a Gatekeeper: To build trust, introduce the tool as a non-blocking assistant. Its feedback should be posted as comments or suggestions, not as a hard gate that prevents a merge. This frames the AI as a helpful collaborator, allowing developers to benefit from its speed while retaining the autonomy to override its suggestions.

- Establish a Feedback Loop: The most effective systems learn over time. Teams should establish a process for collecting feedback on the AI’s suggestions (both good and bad). This qualitative data can be used to refine prompts and configurations, transforming the tool from a static utility into a system that adapts to your team’s specific codebase.

- Master the Prompt: The quality of an LLM’s output is directly tied to the quality of its input. Vague prompts yield vague reviews. Effective prompting involves giving the AI a clear persona (e.g., “You are an expert Go developer focused on security”) and specific instructions on the task and desired output format.
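Putting the prompting advice together: a persona, a scoped task, and a machine-readable output format that the pipeline can parse and post as comments. A minimal sketch; the prompt wording, the JSON schema, and its field names are assumptions for illustration, and the actual LLM call is left out.

```python
import json

SYSTEM_PROMPT = (
    "You are an expert Go developer focused on security. "  # persona
    "Review only the diff you are given. Report concrete problems, not style "
    "preferences, unless they violate the team style guide. "
    'Respond with a JSON array of objects with keys "file", "line", "severity" '
    '("low", "medium", "high") and "comment". Respond with [] if there is nothing to report.'
)


def build_messages(diff: str, style_guide: str = "") -> list[dict]:
    """Assemble chat messages: persona and instructions, optional style guide, then the diff."""
    user = f"Diff to review:\n{diff}"
    if style_guide:
        user = f"Team style guide:\n{style_guide}\n\n{user}"
    return [{"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user}]


def parse_review(reply: str) -> list[dict]:
    """Parse the model's reply; drop malformed items instead of crashing the pipeline."""
    try:
        items = json.loads(reply)
    except json.JSONDecodeError:
        return []
    if not isinstance(items, list):
        return []
    required = {"file", "line", "severity", "comment"}
    return [i for i in items if isinstance(i, dict) and required.issubset(i)]


if __name__ == "__main__":
    print(build_messages("+if token == expected {", "Run gofmt.")[1]["content"])
    reply = '[{"file": "auth.go", "line": 12, "severity": "high", "comment": "Use a constant-time comparison for tokens."}]'
    print(parse_review(reply))
```

Asking for structured output is what lets feedback be posted as line-level comments and, later, counted for the feedback loop described above.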

Navigating the Inherent Challenges

While AI code review is a game-changer, it’s not a silver bullet. Every successful implementation must acknowledge and plan for the technology’s core limitations.

- The Context Blind Spot: This remains the most significant challenge. An LLM analyzing an isolated code change may not understand the system-wide architecture. For example, it might flag a missing authorization check in an API endpoint, not knowing that authorization is handled globally by middleware. This is why human oversight is critical for validating changes against broader architectural principles.

- Hallucinations and Incorrectness: LLMs are probabilistic and can generate “hallucinations” – plausible but incorrect suggestions. They might suggest a non-existent library function or propose a “fix” that introduces a new bug. Every AI suggestion, especially those touching complex logic, must be critically evaluated by a human developer.

- Security and Privacy: Using third-party SaaS tools means sending proprietary code outside your organization’s direct control. While leading vendors have strong security certifications (e.g., SOC 2) and data privacy policies, this may not be acceptable for all companies. Organizations in highly regulated industries may need to prioritize on-premise solutions or custom integrations with models hosted in their own cloud environment.
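One cheap guard against hallucinated APIs is to check, before a suggestion is posted, that every dotted call it makes on an installed module actually resolves in the reviewer’s environment. A minimal Python-only sketch; extracting calls with a regex is a simplifying assumption (it only sees patterns like `json.dumps(`), the check imports the modules it names and so belongs in a sandboxed review environment, and a passing result says nothing about whether the suggestion is logically correct.

```python
import importlib
import importlib.util
import re

# Dotted call sites such as "json.dumps(" or "os.path.join(".
CALL = re.compile(r"\b([A-Za-z_]\w*(?:\.[A-Za-z_]\w*)+)\s*\(")


def resolves(dotted: str) -> bool:
    """True if some importable prefix of `dotted` exposes the remaining attributes."""
    parts = dotted.split(".")
    for i in range(len(parts) - 1, 0, -1):
        try:
            obj = importlib.import_module(".".join(parts[:i]))
        except Exception:
            continue  # not an importable module path; try a shorter prefix
        for name in parts[i:]:
            if not hasattr(obj, name):
                return False
            obj = getattr(obj, name)
        return True
    return False


def unknown_calls(suggestion: str) -> list[str]:
    """Dotted calls on installed modules that do not resolve, i.e. likely hallucinations.

    Calls whose first name is not an installed top-level module (e.g. `self.client.post(`)
    are skipped, because this check cannot evaluate them.
    """
    missing = []
    for dotted in CALL.findall(suggestion):
        top = dotted.split(".")[0]
        try:
            spec = importlib.util.find_spec(top)
        except (ImportError, ValueError):
            spec = None
        if spec is None:
            continue
        if not resolves(dotted):
            missing.append(dotted)
    return missing


if __name__ == "__main__":
    suggestion = ("payload = json.dumps(data)\n"
                  "safe = json.sanitize_dumps(data)\n"
                  "self.client.post(url)")
    print(unknown_calls(suggestion))
```

Flagged names can be stripped from the posted comment or routed to a human, so reviewers see fewer suggestions that cannot possibly work.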

Final Thoughts

AI-powered code review is no longer experimental – it’s becoming a practical way to accelerate development while improving quality. When used wisely, these tools reduce review cycles from days to hours, enforce consistency across tech stacks, and free senior engineers from repetitive checks.

But the benefits depend on how you implement them. The most effective setups start small – rolling out AI as a non-blocking assistant – and evolve with feedback loops, strong prompts, and repo-aware tools that understand context. Human oversight remains critical, especially for security, architecture, and business logic.

The takeaway: treat AI review like a strategic capability, not a shortcut. With the right tools, process design, and governance, teams can balance speed with reliability and unlock real ROI.

Let Us Help You Navigate the Future of Code Review

If you’re looking to set up a robust, AI-augmented code review process as part of your software development lifecycle, Developex experts can help. Contact us to learn how we can tailor a solution that fits your team’s unique needs and accelerates your development goals.