You’ve integrated a powerful Large Language Model (LLM) into your product. The demos were impressive – fluid, human-like conversations that wowed your team. Yet in real-world use, the experience falls short. Users report that the AI forgets important information, can’t access project data, and ultimately fails to deliver meaningful value. Suddenly, your “intelligent” feature feels surprisingly dumb.

This isn’t a failure of the LLM itself – it’s a failure of context. LLMs excel in isolated tasks, but they struggle when disconnected from the proprietary data and tools your users rely on. The result? Stalled innovation, poor user experience, and low ROI on your AI investment.

This is the context crisis, one of the biggest roadblocks to next-generation AI applications. And the solution isn’t simply a bigger model; it’s a smarter bridge. Enter the Model Context Protocol (MCP): an open standard designed to connect LLMs with the real-world context they need to deliver true business value.

This report walks product owners through what MCP is, why it matters strategically, how it is already being adopted in the real world, and how to navigate its implementation complexities from a business perspective.

- The Context Crisis: Why Even the Smartest LLMs Hit a Wall

- Introducing the Model Context Protocol (MCP): A “USB-C Port” for Your AI

- The Strategic Value of MCP: Why It Belongs on Your AI Roadmap

- The Strategic Advantage: MCP Server vs. Internal LLM Integrations (Middleware & Custom APIs)

- Real-World MCP Integration Scenarios: From CRM to Document Management

- A Product Owner's Guide to MCP Implementation: Navigating the Technical Landscape

- Conclusion: From Isolated AI to Integrated Agents

The Context Crisis: Why Even the Smartest LLMs Hit a Wall

Before exploring how MCP solves the problem, it’s crucial to understand why even the most advanced Large Language Models (LLMs) often fail in real-world products. These aren’t rare bugs – they are inherent limitations of current AI technology that directly affect user experience, trust, and business outcomes.

The “Amnesia” Problem: Context Window Limits

Every LLM operates within a context window: a fixed amount of text (measured in tokens) that the model can consider when generating a response. Even the latest models, whose windows can exceed 100,000 tokens, do not have infinite memory. Once a conversation outgrows the window, the application must drop or compress earlier parts to make room, regardless of their importance.
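A minimal Python sketch of the simplest way an application copes with this limit: keep the newest messages that fit a fixed token budget and drop everything older. It assumes one whitespace-separated word costs one token; real systems count with the model's own tokenizer, so the budget here is purely illustrative.

```python
def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the newest messages whose combined size fits within max_tokens.

    Assumption: one whitespace-separated word counts as one token.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message so recent turns win.
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "My account ID is 4821 and I am on the Pro plan",
    "The export button fails on large projects",
    "It started after yesterday's update",
    "Can you check what is wrong",
]
print(fit_to_window(history, max_tokens=20))
```

With a 20-token budget, the oldest message, the one carrying the account ID, is the first to go, which is exactly how a support assistant ends up asking for it again.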

Even inside the window, recall is uneven, a pattern that can be called Context Degradation Syndrome. A well-documented example is the “Lost in the Middle” effect: LLMs tend to use information at the start and end of a long context well but often overlook critical details buried in the middle.

For products, the impact is tangible:

- Customer support AI may repeatedly ask for the same account information.

- Code assistants may forget requirements mentioned earlier in a session.

The result is a repetitive, frustrating experience that erodes confidence in your AI feature and can drive users away.

The “Noise” Problem: Irrelevant Data and Distraction

It’s not just about the amount of context – it’s also about quality. Feeding an entire conversation history into an LLM without filtering introduces noise: tangents, corrections, or casual chatter that distracts the model from the user’s current goal.

From a product perspective, noise causes unpredictable behavior:

- Marketing AI might generate off-brand content.

- Financial tools could produce flawed insights by latching onto irrelevant earlier data.

This unpredictability directly undermines user trust, the most critical asset for any AI-powered product.

The “Isolation” Problem: Disconnected from Live Data

The most significant limitation is that LLMs are fundamentally isolated. Their knowledge is frozen at the point their training data was collected, and by default they cannot access real-time, proprietary data or perform actions in other systems.

Without this connection, an LLM can:

- Summarize what a support ticket should contain – but cannot create it.

- Describe a sales strategy – but cannot execute the first step.

This isolation is why many enterprise AI initiatives stall. LLMs alone cannot become true business workhorses without a mechanism to bridge the gap between static knowledge and live, actionable data.

Introducing the Model Context Protocol (MCP): A “USB-C Port” for Your AI

The chaos of custom integrations and the inherent limitations of isolated LLMs demand a new approach. The Model Context Protocol (MCP) provides an elegant, standardized solution. Developed by Anthropic, MCP is an open protocol that standardizes how applications provide context and tools to LLMs, so that a model can both read relevant data and request actions in other systems.

MCP Explained: The “USB-C for AI” Analogy

Think of MCP as the USB-C port for artificial intelligence. Before USB-C, every device required its own unique charger or cable. It was confusing, inefficient, and prone to failure. USB-C created a universal standard. MCP does the same for AI: instead of fragile, one-off integrations for each API and data source, product teams can use a single, standardized language to connect their AI to multiple tools and datasets seamlessly.

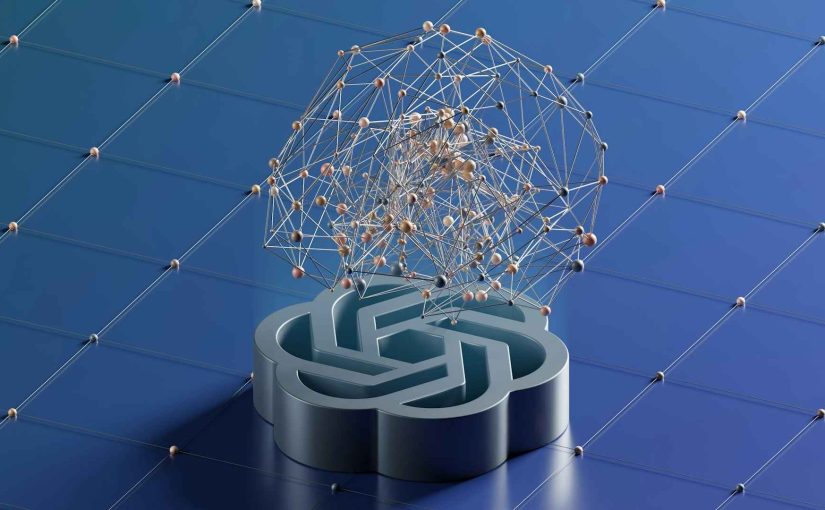

How MCP Architecture Works: Client, Server, and Host

At its core, MCP uses a client-server architecture that decouples the AI application from the tools it uses. This is a critical architectural advantage. It means that adding a new capability to your AI no longer requires a complex, core-product development cycle. Instead, it becomes as simple as “plugging in” a new component. The key players in this architecture are:

- MCP Host: This is your application – your SaaS platform, your mobile app, your code editor – where the user interacts with the LLM.

- MCP Client: This is a component that lives inside your Host application. It is the “USB-C port” itself, responsible for speaking the MCP language. Each client maintains a one-to-one connection with a single MCP server, so a Host that uses several servers runs one client per server.

- MCP Server: These are the “peripherals” or “adapters.” Each server is a lightweight, independent program that exposes a specific capability. One server might connect to a GitHub repository, another to a PostgreSQL database, and a third to your company’s internal CRM via its API. These servers can run locally on a user’s machine or remotely as a third-party service.
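To make the decoupling concrete, here is a minimal in-process Python sketch of the three roles. It is a simplification under stated assumptions: `StubServer`, `Client`, and `Host` are hypothetical names, the JSON string round-trip stands in for a real transport such as stdio or HTTP, and the `initialize` handshake and capability negotiation are omitted. The message shapes follow MCP's use of JSON-RPC 2.0 with `tools/list` and `tools/call` requests.

```python
import json
from typing import Any, Callable, Optional


class StubServer:
    """Hypothetical in-process stand-in for an MCP server.

    Real servers run as separate processes or remote services reached over a
    transport; a JSON string round-trip stands in for that transport here.
    """

    def __init__(self, tools: dict[str, Callable[..., str]]) -> None:
        self.tools = tools

    def handle(self, raw: str) -> str:
        request = json.loads(raw)
        if request["method"] == "tools/list":
            result: dict[str, Any] = {"tools": [{"name": name} for name in self.tools]}
        elif request["method"] == "tools/call":
            params = request["params"]
            text = self.tools[params["name"]](**params["arguments"])
            result = {"content": [{"type": "text", "text": text}]}
        else:
            raise ValueError(f"stub does not support {request['method']}")
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


class Client:
    """Holds exactly one server connection (MCP's 1:1 client-server pairing)."""

    def __init__(self, server: StubServer) -> None:
        self.server = server
        self.next_id = 1

    def request(self, method: str, params: Optional[dict] = None) -> dict:
        message: dict[str, Any] = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            message["params"] = params
        self.next_id += 1
        response = json.loads(self.server.handle(json.dumps(message)))
        return response["result"]


class Host:
    """Your application: it creates one client per server it plugs in."""

    def __init__(self) -> None:
        self.clients: dict[str, Client] = {}

    def plug_in(self, name: str, server: StubServer) -> None:
        # Adding a capability is a registration, not a change to core code.
        self.clients[name] = Client(server)

    def call_tool(self, server_name: str, tool: str, **arguments: Any) -> str:
        result = self.clients[server_name].request(
            "tools/call", {"name": tool, "arguments": arguments}
        )
        return result["content"][0]["text"]


host = Host()
host.plug_in("github", StubServer({"open_issue": lambda title: f"Opened issue: {title}"}))
host.plug_in("crm", StubServer({"lookup_account": lambda account: f"{account}: 3 open deals"}))
print(host.call_tool("crm", "lookup_account", account="Acme"))
```

Adding a server is one `plug_in` call, and each server gets its own client, mirroring the one-to-one client-server pairing in MCP's architecture.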

This architecture fundamentally changes the strategic planning and agility of a product roadmap. A product owner can now think of adding new AI skills not as a major feature release requiring a full deployment, but as a minor, incremental update that involves connecting a new, pre-built server.

The Building Blocks of Action: Prompts, Resources, and Tools

MCP standardizes the interaction between the client and server around three core primitives, which give the LLM the ability to both reason and act:

- Resources: These grant the LLM read-only access to contextual data. Think of them as GET requests. Examples include “Read the contents of this file,” “Get the user’s purchase history from the database,” or “Fetch the latest comments on this Jira ticket.”

- Tools: These empower the LLM to take action or write data. Think of them as POST or PUT requests. Examples include “Create a new file,” “Send a message to the #engineering Slack channel,” or “Update the customer’s status to ‘resolved’ in the CRM.”

- Prompts: These are reusable templates or instructions that guide the LLM’s behavior for specific, recurring tasks, ensuring consistency and reliability.
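On the wire, each primitive corresponds to its own JSON-RPC 2.0 method: `resources/read` for resources, `tools/call` for tools, and `prompts/get` for prompts. The sketch below only builds those request messages; the URI, tool name, prompt name, and arguments are hypothetical examples, and a real client would send them over an established MCP session.

```python
import json


def make_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP uses."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": method, "params": params})


# Resource: read-only context addressed by a URI (hypothetical URI scheme).
read_comments = make_request(1, "resources/read", {"uri": "jira://tickets/PROJ-42/comments"})

# Tool: an action with named arguments (hypothetical tool and arguments).
post_message = make_request(
    2,
    "tools/call",
    {"name": "send_message", "arguments": {"channel": "#engineering", "text": "Build is green"}},
)

# Prompt: a reusable template fetched by name and filled in (hypothetical name).
get_prompt = make_request(
    3, "prompts/get", {"name": "summarize_ticket", "arguments": {"ticket": "PROJ-42"}}
)

for message in (read_comments, post_message, get_prompt):
    print(message)
```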

This combination of readable resources and executable tools is what allows an LLM to move beyond simple conversation and begin performing meaningful work.

The Strategic Value of MCP: Why It Belongs on Your AI Roadmap

For product leaders, adopting a new technology is less about the tech itself and more about the value it delivers. The Model Context Protocol (MCP) turns technical capabilities into tangible business benefits, making it a must-consider for any enterprise AI strategy.

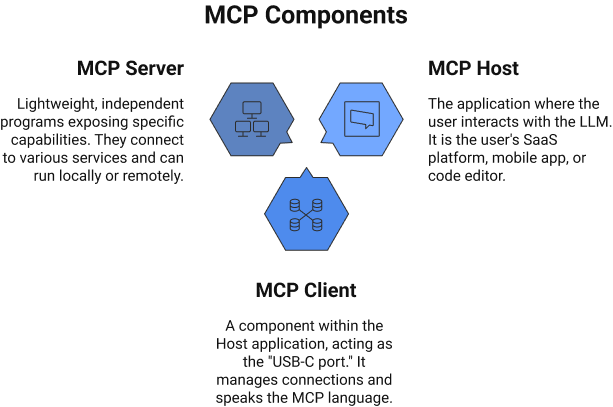

Benefit 1: Radically Accelerate Time-to-Market

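Before MCP, connecting an LLM to new APIs or data sources meant custom development for every combination. With N AI applications and M tools or data sources, that adds up to N × M separate integrations: the classic “N × M” integration problem. This approach is slow, expensive, and hard to scale.

MCP’s plug-and-play model solves this: each application implements an MCP client once and each tool is wrapped in an MCP server once, so the work grows with N + M instead of N × M. With a growing ecosystem of pre-built MCP servers for common integrations like Slack, GitHub, or Stripe, development teams can: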

- Avoid reinventing the wheel

- Reduce custom code

- Shorten development cycles

The result: faster delivery of AI-powered features and accelerated time-to-market.
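A back-of-the-envelope count shows why the integration model matters. The sketch assumes every AI application needs every tool and counts connectors, not engineering hours, so treat the numbers as illustrative.

```python
def integration_count(apps: int, tools: int) -> tuple[int, int]:
    """Return (point-to-point integrations, MCP components) for apps x tools.

    Point-to-point: every app needs its own connector to every tool (N x M).
    MCP: each app implements a client once, each tool gets one server (N + M).
    """
    return apps * tools, apps + tools


for apps, tools in [(3, 5), (5, 20)]:
    custom, mcp = integration_count(apps, tools)
    print(f"{apps} apps x {tools} tools: {custom} custom integrations vs {mcp} MCP components")
```

For 5 applications and 20 tools, that is 100 bespoke integrations versus 25 MCP components (5 clients plus 20 servers), and the gap widens with every tool added.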

Benefit 2: Build Autonomous AI Agents, Not Just Chatbots

MCP provides the “nervous system” for autonomous AI agents – systems that can plan and execute complex, multi-step tasks without constant human intervention. While a chatbot answers questions, an agent completes workflows.

For example, an Automated Contract Analyst in legal tech could:

- Access newly uploaded contracts via a File System server

- Extract key terms and cross-reference company policies using a PostgreSQL server

- Flag non-compliant clauses

- Post a summary and link to the legal team’s Slack channel

Such high-value automation is impossible with isolated LLMs but becomes far more practical with MCP. The focus for product teams shifts from low-level integration plumbing to workflow design and user experience, which can become a competitive edge.

Benefit 3: De-Risk and Future-Proof Your AI Strategy

Vendor lock-in is a major concern for long-term AI investments. MCP, as an open protocol, mitigates this risk. Teams can:

- Build integrations against MCP once

- Swap underlying LLMs (e.g., Anthropic Claude, OpenAI GPT-4, or future open-source models) without rewriting integrations

This flexibility ensures your enterprise AI strategy remains agile, cost-effective, and future-proof, always leveraging the best available models for your business needs.
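One concrete reason integrations survive a model swap: an MCP server describes each tool once, as a name, a description, and a JSON Schema `inputSchema`, and the host translates that description into whatever format its current model expects. The sketch below assumes a hypothetical `update_customer_status` tool and shows the translation for OpenAI's Chat Completions and Anthropic's Messages APIs as they are commonly documented; check the vendors' current docs before relying on exact field names.

```python
# One tool definition in MCP's shape: name, description, JSON Schema input.
MCP_TOOL = {
    "name": "update_customer_status",  # hypothetical tool
    "description": "Set a customer's status in the CRM.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "customer_id": {"type": "string"},
            "status": {"type": "string", "enum": ["open", "resolved"]},
        },
        "required": ["customer_id", "status"],
    },
}


def to_openai(tool: dict) -> dict:
    """Shape used by OpenAI's Chat Completions `tools` parameter."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic(tool: dict) -> dict:
    """Shape used by Anthropic's Messages API `tools` parameter."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }


# Swapping models changes only the adapter; MCP_TOOL itself is untouched.
ADAPTERS = {"openai": to_openai, "anthropic": to_anthropic}
print(ADAPTERS["anthropic"](MCP_TOOL)["input_schema"] is MCP_TOOL["inputSchema"])
```

Changing vendors means choosing a different adapter; the tool definition, and the server behind it, stay as they are.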

MCP in Action: A Look at the Growing Ecosystem

MCP is not just a theoretical concept; it is a rapidly maturing standard with real-world adoption, particularly within the developer community – a classic leading indicator of broader enterprise adoption. When developers embrace a protocol to automate their own complex workflows, it signals its power and utility. The tools they build for themselves often become the foundational blocks for wider business process automation.

The Strategic Advantage: MCP Server vs. Internal LLM Integrations (Middleware & Custom APIs)

For a product owner building AI features, a critical architectural question arises: “Why build an MCP server for my product when I can just call an LLM API directly from my backend, perhaps through a centralized middleware layer?” This is an excellent question, as a well-designed internal middleware (or “LLM Gateway”) can indeed solve many operational challenges like managing session memory, A/B testing prompts, switching between LLM vendors, and handling cost and privacy controls for your own application’s use.

The key difference lies in the strategic purpose: an LLM middleware is an internal-facing solution to make your own application a better consumer of AI, while an MCP server is an external-facing solution that turns your application into a standardized tool for the entire AI ecosystem.

| Feature | LLM Middleware / Custom API | MCP Server |
|---|---|---|
| Primary Goal | Centralize and manage all LLM calls originating from within your own application. | Expose your application’s data and tools to any external AI agent in a standardized way. |
| Who is the “Client”? | Your own application’s frontend or internal services. | Any MCP-compliant application (e.g., Cursor, Claude Desktop, another SaaS product). |
| Key Benefit | Centralized Management: Provides a single point of control for costs, security, and prompt engineering for your internal use cases. | Ecosystem Interoperability: Allows your product to be a “Lego brick” in larger, multi-tool workflows, driving adoption and network effects.[13] |
| Architectural Pattern | A “walled garden” approach that optimizes internal AI usage. | An “open platform” approach that prepares your product for the agentic AI era. |

To illustrate the difference, let’s consider a project management SaaS tool:

- Alternative (LLM Middleware/In-App Query): A user is inside your project management web application. They click a button that says “Summarize Project Risks.” Your backend, via its LLM middleware, gathers all the relevant task data, sends it to an LLM, and displays the summary inside your web app. The functionality is useful but locked within your system.

- MCP Server Advantage: A developer is working in their code editor (e.g., Cursor, an MCP Host). After fixing a bug, they tell their AI assistant: “Create a ticket in our project management tool for the fix I just committed, assign it to the QA team, and link it to this commit.” The assistant seamlessly uses your Project Management MCP Server to perform this action without the developer ever leaving their editor. Your tool has just become an active, integrated part of a much larger, more powerful workflow.

In short, while a direct integration or middleware layer can add an AI feature to your product, implementing an MCP server turns your product into a feature for the entire AI ecosystem. It is a strategic choice to embrace interoperability and position your SaaS for the future of agentic AI.

Who is Using MCP? The Hosts and Clients

A growing number of applications, especially those focused on enhancing developer productivity, have integrated MCP clients to connect their users to a world of external tools. Key examples include:

- AI-Native Code Editors & IDEs: This is the most mature category for MCP adoption. These tools integrate AI deeply into the software development workflow, using MCP to give the AI agent access to the entire codebase, version control, and other development tools. Examples include Cursor, Zed, and the Continue extension for VS Code and JetBrains.

- General-Purpose AI Chat Interfaces: These applications act as a central hub where users can interact with an LLM and connect it to a variety of custom MCP servers. Claude Desktop is a well-known example, but a vibrant open-source community has produced alternatives like Open WebUI, LibreChat, and AnythingLLM.

- Enterprise Platforms: Demonstrating its potential beyond developer tools, Microsoft’s Power Platform Test Engine has implemented an MCP server to help generate more accurate and contextually relevant application tests. Similarly, platforms like Workato use MCP to enable enterprise agents to access a wide range of business applications and databases.

The Expanding Universe of Tools: MCP Servers

The ecosystem of available MCP servers is expanding quickly, comprising reference implementations from the protocol’s maintainers, official integrations from companies, and a growing number of community-built servers. This variety showcases the breadth of capabilities that can be unlocked:

- DevOps & Code: GitHub, Git, PostgreSQL.

- Enterprise & Productivity: Stripe, Slack, Google Drive, Linear, Figma.

- Web & Data: Apify, which provides access to thousands of web scraping and automation “Actors”.

- Platform-Specific: JetBrains IDEs, enabling AI to perform actions like setting breakpoints or executing terminal commands.

The following table provides a scannable overview of the landscape, helping a product owner quickly assess the maturity and relevance of the ecosystem to their specific domain.

| Category | MCP Host/Client Examples | Notable MCP Server Integrations |
|---|---|---|
| IDEs & Code Assistants | Cursor, Zed, Continue | GitHub, JetBrains, PostgreSQL, Git |
| Enterprise & Productivity | Claude Desktop | Stripe, Google Drive, Linear, Figma |
| Communication & Data | Claude Desktop | Slack, File System, Apify |
| Enterprise Platforms | Microsoft Power Platform | Power Apps Test Engine |

Real-World MCP Integration Scenarios: From CRM to Document Management

To understand the true value of MCP, consider how it transforms common enterprise systems. Each scenario moves AI from a simple chatbot to an active participant in business workflows, enabling context-aware, multi-step automation.

1. Customer Relationship Management (CRM)

User Experience:

A sales manager works in Slack (the MCP Host) and types:

“/sales-ai Give me a summary of our enterprise clients with negative sentiment trends this month.”

The AI agent, via an MCP server connected to Salesforce, retrieves relevant accounts, analyzes unstructured activity logs for sentiment, and responds with a summary card listing three at-risk clients. A “Create follow-up tasks” button allows the agent to automatically schedule tasks in Salesforce for each account owner.

LLM Advantage:

- Traditional CRM automation is rule-based and cannot interpret unstructured text.

- LLM-powered agents can detect sentiment in emails or call logs and map inconsistent fields (e.g., “customer_name” vs. “full_name”).

Potential MCP Interfaces:

- Resource: get_contact_history(contact_id) – fetch past emails, calls, tickets

- Tool: update_lead_status(lead_id, new_status) – move a lead through the pipeline

- Tool: create_follow_up_task(contact_id, details) – schedule reminders
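A sketch of how the two CRM tools above might be implemented behind an MCP server, using an in-memory store instead of Salesforce. Everything here is hypothetical (`InMemoryCRM`, the pipeline stages, the handler signatures); the one protocol detail it borrows is that MCP tool results carry a `content` list plus an `isError` flag, so a bad request is reported back to the model rather than crashing the session.

```python
from dataclasses import dataclass, field

PIPELINE = ["new", "qualified", "proposal", "won", "lost"]  # hypothetical stages


@dataclass
class InMemoryCRM:
    """Hypothetical stand-in for the CRM data behind the MCP server."""
    leads: dict[str, str] = field(default_factory=dict)
    tasks: list[dict] = field(default_factory=list)


def text_result(text: str, is_error: bool = False) -> dict:
    """Wrap a message in the result shape MCP uses for tool calls."""
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def update_lead_status(crm: InMemoryCRM, lead_id: str, new_status: str) -> dict:
    if lead_id not in crm.leads:
        return text_result(f"Unknown lead {lead_id}", is_error=True)
    if new_status not in PIPELINE:
        # Tell the model what went wrong so the agent can correct itself.
        return text_result(f"Invalid status {new_status!r}; expected one of {PIPELINE}", is_error=True)
    crm.leads[lead_id] = new_status
    return text_result(f"Lead {lead_id} moved to {new_status}")


def create_follow_up_task(crm: InMemoryCRM, contact_id: str, details: str) -> dict:
    crm.tasks.append({"contact_id": contact_id, "details": details})
    return text_result(f"Task #{len(crm.tasks)} created for {contact_id}")


crm = InMemoryCRM(leads={"L-1": "qualified"})
print(update_lead_status(crm, "L-1", "proposal"))
print(update_lead_status(crm, "L-1", "maybe"))
print(create_follow_up_task(crm, "C-7", "Call about renewal risk"))
```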

2. Learning Management System (LMS)

User Experience:

An instructional designer uses a course authoring tool (MCP Host) and types:

“Generate a 10-question multiple-choice quiz based on the ‘Photosynthesis’ module in our Canvas LMS, including two scenario-based questions.”

The AI agent accesses the module via an MCP server, understands key concepts, and generates the quiz directly in the interface. Questions can be edited, approved, or regenerated before publishing.

LLM Advantage:

- Traditional LMS authoring requires manual content creation.

- LLMs act as generative partners, creating relevant content while instructors curate and refine.

Potential MCP Interfaces:

- Resource: get_student_progress(student_id, course_id) – retrieve grades and engagement

- Resource: find_course_material(query) – search LMS for relevant content

- Tool: submit_assignment_grade(assignment_id, student_id, grade) – automate grading
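MCP resources are addressed by URIs, and parameterized resources such as `get_student_progress(student_id, course_id)` can be advertised as URI templates (RFC 6570). The sketch below assumes a hypothetical `lms://` scheme and shows how a server might map an incoming URI back to the resource and its parameters; the matcher only handles simple `{name}` placeholders that each span one path segment, a small subset of what RFC 6570 allows.

```python
import re
from typing import Optional

# Hypothetical URI templates for LMS resources.
TEMPLATES = {
    "student_progress": "lms://courses/{course_id}/students/{student_id}/progress",
    "course_material": "lms://courses/{course_id}/materials/{module}",
}


def match(template: str, uri: str) -> Optional[dict[str, str]]:
    """Return the template variables if uri fits template, else None."""
    pattern = re.escape(template)
    # re.escape turns "{name}" into "\{name\}"; replace each with a named group
    # that matches a single path segment.
    pattern = re.sub(r"\\\{(\w+)\\\}", r"(?P<\1>[^/]+)", pattern)
    found = re.fullmatch(pattern, uri)
    return found.groupdict() if found else None


def resolve(uri: str) -> Optional[tuple[str, dict[str, str]]]:
    """Find which resource a URI refers to and extract its parameters."""
    for name, template in TEMPLATES.items():
        params = match(template, uri)
        if params is not None:
            return name, params
    return None


print(resolve("lms://courses/BIO101/students/s-42/progress"))
```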

3. Project Management System

User Experience:

A developer in VS Code highlights a code block and types:

“Create a Jira ticket for this fix, link it to the #support Slack bug report, and commit the changes with a descriptive message.”

The AI agent orchestrates multiple MCP servers: Jira for ticket creation, Slack for bug reference, and Git for committing code. A confirmation appears in the IDE:

“Jira ticket JIRA-123 created and linked to commit abc123.”

LLM Advantage:

- Traditional project management automation is trigger-based and cannot reason across unstructured inputs.

- LLM-powered agents synthesize natural language instructions and code into structured, actionable workflows.

Potential MCP Interfaces:

- Tool: create_task(project, title, description, assignee) – create new issues

- Resource: get_project_timeline(project_name) – fetch milestones and deadlines

- Tool: update_task_status(task_id, new_status) – move a task through its lifecycle
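The orchestration in this scenario boils down to chaining tool calls across servers, with the output of one step feeding the next. In the sketch below the sequence is hard-coded for clarity, whereas a real agent lets the LLM choose each call; the server names, tool signatures, and return values (`JIRA-123`, `abc123`) are hypothetical stubs.

```python
from typing import Any, Callable

# Hypothetical tools, grouped by the server that would expose them.
SERVERS: dict[str, dict[str, Callable[..., Any]]] = {
    "slack": {"find_message": lambda channel, query: "https://chat.example/p/991"},
    "jira": {"create_task": lambda project, title, description, assignee: "JIRA-123"},
    "git": {"commit": lambda message: "abc123"},
}


def call(server: str, tool: str, **arguments: Any) -> Any:
    """Route one tool call to the named server (stands in for tools/call)."""
    return SERVERS[server][tool](**arguments)


def file_fix(summary: str) -> str:
    # Step 1: find the bug report so the ticket can link to it.
    report = call("slack", "find_message", channel="#support", query=summary)
    # Step 2: create the ticket; its ID feeds the next step.
    ticket = call("jira", "create_task", project="APP", title=summary,
                  description=f"Reported in {report}", assignee="qa-team")
    # Step 3: commit with the ticket ID in the message so the two are linked.
    commit = call("git", "commit", message=f"{ticket}: {summary}")
    return f"Jira ticket {ticket} created and linked to commit {commit}."


print(file_fix("Fix null check in export"))
```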

4. Document Management System

User Experience:

An executive types in a chat interface (MCP Host):

“Find the final version of the Q3 marketing plan from last year and give me the key takeaways.”

The AI agent, via an MCP server connected to Google Drive, performs a semantic search, distinguishes the final version from drafts, and provides a concise, bulleted summary with a link to the source document.

LLM Advantage:

- Traditional keyword search cannot interpret nuance like “final version” vs. “draft.”

- LLM-powered semantic search retrieves the most relevant document and generates summarized insights.

Potential MCP Interfaces:

- Resource: search_documents(query) – find files by content, not just title

- Resource: get_document_summary(file_id) – summarize key points

- Tool: create_document(title, content, folder_id) – draft new documents or meeting notes
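Semantic search usually means ranking documents by the similarity of embedding vectors rather than by shared keywords. The sketch below uses made-up three-dimensional vectors in place of a real embedding model and plain cosine similarity; `search_documents` mirrors the interface above, but its `status` filter is an assumption about the available document metadata.

```python
import math
from typing import Optional

# Toy 3-dimensional "embeddings"; a real server would compute these with an
# embedding model. Titles, statuses, and vectors are all made up.
DOCUMENTS = [
    {"id": "f1", "title": "Q3 Marketing Plan (draft v2)", "status": "draft", "vector": [0.9, 0.1, 0.2]},
    {"id": "f2", "title": "Q3 Marketing Plan (final)", "status": "final", "vector": [0.85, 0.15, 0.25]},
    {"id": "f3", "title": "Q3 Engineering Roadmap", "status": "final", "vector": [0.2, 0.9, 0.1]},
]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search_documents(query_vector: list[float], status: Optional[str] = None, top_k: int = 2) -> list[dict]:
    """Rank documents by similarity to the query, optionally filtering on status."""
    candidates = [d for d in DOCUMENTS if status is None or d["status"] == status]
    candidates.sort(key=lambda d: cosine(query_vector, d["vector"]), reverse=True)
    return candidates[:top_k]


query = [1.0, 0.0, 0.2]  # toy vector standing in for "final Q3 marketing plan"
print([d["id"] for d in search_documents(query)])                  # similarity only
print([d["id"] for d in search_documents(query, status="final")])  # plus metadata
```

With these toy vectors the draft scores slightly higher than the final version, which is why a server typically combines similarity with metadata such as document status instead of relying on similarity alone.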

A Product Owner’s Guide to MCP Implementation: Navigating the Technical Landscape

With great power comes great responsibility. Adopting MCP unlocks incredible potential, but it also introduces new technical complexities that must be managed from a business and product perspective. A successful implementation requires a pragmatic understanding of the risks and a plan to mitigate them.

Managing Security and Trust – Your AI Risk Register

Adopting MCP fundamentally transforms the security and compliance surface of an AI-powered product. The risk is no longer centralized with the LLM provider; it becomes distributed across an ecosystem of servers that the product team must manage. This necessitates a “zero-trust” approach where every tool integration is treated as a potential security vector until verified.

The Risk of Unintended Actions (Confused Deputy & Command Injection)

An LLM could be tricked by a malicious prompt, including instructions hidden in a document, ticket, or web page the agent reads, into using a tool to access data or perform an action for which the user is not authorized. This is known as the “confused deputy” problem and represents a potential compliance and data-breach nightmare.

- Product Owner’s Mitigation Strategy: The product backlog must prioritize features that mitigate this risk. These are not optional add-ons; they are core requirements. This includes implementing user confirmation flows (“The AI wants to execute git push. Do you approve?”), sandboxing local servers to limit their permissions, and ensuring all data passed to a server is rigorously sanitized to prevent command injection vulnerabilities.
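The safeguards above can be layered in a single gate that sits between the model's requested tool call and its execution. A minimal sketch, assuming a hypothetical policy table: the tool names, scopes, and argument allowlist are illustrative, and a production host would pull permissions from its identity provider.

```python
import re
from typing import Callable

# Hypothetical policy: which tools change state, and which scope each requires.
DESTRUCTIVE = {"git_push", "delete_file"}
REQUIRED_SCOPE = {"git_push": "repo:write", "delete_file": "repo:write", "read_file": "repo:read"}
SAFE_ARG = re.compile(r"[\w./-]+")  # allowlist: letters, digits, _ . / -


def guarded_call(tool: str, args: dict[str, str], user_scopes: set[str],
                 confirm: Callable[[str], bool]) -> str:
    # 1. Authorize against the *user's* permissions, not the server's, so the
    #    model cannot act as a confused deputy.
    scope = REQUIRED_SCOPE.get(tool)
    if scope is None or scope not in user_scopes:
        return f"denied: {tool} requires {scope or 'an unknown scope'}"
    # 2. Reject argument values outside the allowlist (e.g. "; rm -rf /").
    for name, value in args.items():
        if not SAFE_ARG.fullmatch(value):
            return f"rejected: unsafe value for {name!r}"
    # 3. Ask the human before anything that changes state.
    if tool in DESTRUCTIVE and not confirm(f"The AI wants to execute {tool} {args}. Do you approve?"):
        return "cancelled by user"
    return f"executing {tool}"  # a real host would now send the tool call


print(guarded_call("git_push", {"branch": "main"}, {"repo:read"}, lambda prompt: True))
```

An allowlist is a backstop, not the main defense against command injection; servers should also pass arguments to programs as separate parameters rather than building shell command strings.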

The Risk of Malicious Tools (Supply Chain & Tool Injection)

A user could install a malicious third-party MCP server, or a trusted server could be compromised in a future update, injecting malicious code into your product’s ecosystem.

- Product Owner’s Mitigation Strategy: The product needs a clear strategy for vetting and trusting external servers. This could involve creating a curated “marketplace” of verified tools. For the engineering team, it means mandating security best practices as part of the Definition of Done. This includes version pinning for MCP servers to prevent unexpected updates, implementing software composition analysis (SCA) to scan for vulnerabilities in dependencies, and requiring cryptographic code signing for all MCP components your team builds or distributes.
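Version pinning is strongest when the pin covers the exact bytes that were reviewed, not just a version number. A sketch assuming a hypothetical lockfile that maps each server name to its approved version and SHA-256 digest; the host refuses to launch anything that does not match.

```python
import hashlib


def verify_artifact(name: str, version: str, artifact: bytes,
                    lock: dict[str, tuple[str, str]]) -> bool:
    """Allow a server to launch only if its version and bytes match the pin."""
    if name not in lock:
        return False  # unknown server: not vetted, do not run
    pinned_version, pinned_digest = lock[name]
    if version != pinned_version:
        return False  # an unexpected update needs a fresh review
    return hashlib.sha256(artifact).hexdigest() == pinned_digest


reviewed = b"print('crm server v1.4.2')"  # stands in for the package you reviewed
LOCK = {"crm-server": ("1.4.2", hashlib.sha256(reviewed).hexdigest())}

print(verify_artifact("crm-server", "1.4.2", reviewed, LOCK))             # True
print(verify_artifact("crm-server", "1.4.2", reviewed + b"#evil", LOCK))  # False: tampered
print(verify_artifact("crm-server", "1.5.0", reviewed, LOCK))             # False: silent upgrade
```

A digest proves the artifact is unchanged since review, while code signing additionally proves who published it; the two are complementary.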

Resource Planning and Total Cost of Ownership (TCO)

The cost of an MCP-enabled feature extends far beyond the LLM API bill. Product owners must plan for the full total cost of ownership.

- Development and Deployment: There is a clear “build vs. buy” decision. Using a pre-built, official server for a common tool like Stripe is fast and efficient. However, building a custom MCP server to connect to your company’s proprietary internal database requires significant, dedicated engineering effort that must be factored into the roadmap and budget.

- The Authentication Imperative: For any serious remote or multi-user application, implementing robust authentication is non-negotiable. Early versions of the protocol left authentication to each implementation, but the specification now defines an authorization framework for HTTP-based servers built on OAuth 2.1, which consolidates OAuth 2.0 best practices. Integrating a proper OAuth flow is a critical piece of the implementation puzzle that requires its own development time and expertise.

- Ongoing Maintenance: MCP servers are code, just like any other part of your application stack. They are subject to vulnerabilities and require ongoing maintenance. They must be included in your organization’s standard vulnerability management and software update processes. This represents an operational cost that must be planned for to ensure the long-term security and reliability of the product.
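One concrete piece of the OAuth integration mentioned above: PKCE (Proof Key for Code Exchange, RFC 7636), which OAuth 2.1 and the MCP authorization specification expect clients to use in the authorization code flow. This sketch covers only generating the code verifier and its `S256` challenge, not redirects, discovery, or the token exchange itself.

```python
import base64
import hashlib
import secrets


def make_code_verifier() -> str:
    """Random verifier; 32 random bytes encode to 43 URL-safe characters."""
    return secrets.token_urlsafe(32)


def code_challenge(verifier: str) -> str:
    """S256 method: BASE64URL(SHA256(verifier)) with '=' padding removed."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


verifier = make_code_verifier()
challenge = code_challenge(verifier)
# The client sends `challenge` (with code_challenge_method=S256) in the
# authorization request, then proves possession by sending `verifier`
# when it exchanges the authorization code for a token.
print(len(verifier), challenge)
```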

Conclusion: From Isolated AI to Integrated Agents

Isolated LLMs are powerful but limited. The context crisis prevents them from delivering reliable, high-value features. The Model Context Protocol (MCP) changes that, connecting AI to real-world data and tools to enable context-aware, task-completing agents.

For product owners, MCP is a foundational technology that:

- Turns chatbots into autonomous agents

- Unlocks actionable insights and workflows

- Enables scalable, interoperable AI across enterprise systems

The ecosystem is growing, the benefits are clear, and now is the time to plan MCP integration on your product roadmap.

Partner with Developex: Our expertise in Generative AI, NLP, and enterprise AI integration ensures MCP-based solutions are secure, scalable, and aligned with business goals. Schedule a free consultation to explore how MCP can unlock your product’s AI potential.